13 Case Studies

In this chapter, we’ll explore comprehensive case studies that demonstrate intentional prompting principles applied to real-world programming scenarios. These case studies illustrate how the six-step methodology and intentional prompting techniques come together to solve complex problems effectively.

13.1 Case Study 1: Building a Data Analysis Pipeline

13.1.1 The Problem

A research team needs to analyze a large dataset of customer transactions to identify purchasing patterns. They have a CSV file with millions of records containing transaction data including:

- Transaction ID

- Customer ID

- Date and time

- Product categories

- Purchase amount

- Payment method

- Store location

The team needs to: 1. Clean and preprocess the data 2. Identify the most frequently purchased product combinations 3. Analyze how purchasing patterns change over time 4. Visualize the results

13.1.2 The Intentional Prompting Approach

13.1.2.1 Step 1: Restate the Problem

Human prompt:

I need to build a data analysis pipeline to process customer transaction data. Let me restate the problem:

We have a large CSV file (millions of rows) with transaction data including transaction ID, customer ID, date/time, product categories, purchase amount, payment method, and store location.

We need to:

1. Clean the data (handle missing values, standardize formats)

2. Find frequently purchased product combinations

3. Analyze how purchasing patterns change over time

4. Create visualizations of the results

Is this understanding correct, and are there any important aspects I've missed?AI response: > Your understanding is correct! Your restatement captures the main requirements of building a data analysis pipeline for transaction data. Here are a few additional considerations that might be important: > > 1. Data volume handling: With millions of rows, you’ll need efficient processing techniques that can handle large datasets. This might involve streaming processing, chunking the data, or using distributed computing frameworks. > > 2. Performance considerations: Some operations like finding product combinations might be computationally expensive. You might need optimization strategies. > > 3. Data privacy: Transaction data may contain sensitive information. You might need to anonymize or handle the data according to relevant privacy regulations. > > 4. Output format: It’s worth considering what format the final results should be in (static reports, interactive dashboards, raw data for further analysis, etc.). > > 5. Repeatability: Will this be a one-time analysis or something that needs to be run regularly? If it’s the latter, the pipeline should be designed for automation and reproducibility. > > 6. Data quality assessment: Beyond basic cleaning, you might want to include statistical analysis to identify outliers or anomalies that could skew the results. > > With these considerations in mind, your restatement effectively captures the core of the problem.

13.1.2.2 Step 2: Identify Input and Output

Human prompt:

Let me define the specific inputs and outputs for this pipeline:

INPUTS:

- A CSV file with the following columns:

* transaction_id (string): Unique identifier for each transaction

* customer_id (string): Identifier for the customer

* timestamp (datetime): Date and time of the transaction

* product_categories (string): Comma-separated list of purchased product categories

* amount (float): Purchase amount in dollars

* payment_method (string): Method of payment

* store_location (string): Location identifier for the store

OUTPUTS:

1. Cleaned dataset (CSV or Parquet)

2. Top 20 most frequently purchased product combinations (CSV)

3. Monthly purchasing trends by product category (CSV)

4. Visualizations:

* Bar chart of top product combinations

* Line chart showing purchasing trends over time

* Heatmap of purchase patterns by day of week and hour

Do these specifications adequately cover what's needed for the pipeline?AI response: > Your input and output specifications provide a clear framework for the pipeline. They’re comprehensive and specific, which is excellent for guiding the implementation. Here are a few additional points to consider: > > For inputs: > > 1. File format details: You might want to specify if there are any header rows, quoting patterns, or delimiter specifications for the CSV. > > 2. Expected data sizes: Approximate size of the file (GB) and typical row count can help plan for resource requirements. > > 3. Data quality expectations: Are there known issues with the data? (e.g., are missing values expected in certain columns, are there known inconsistencies in formatting) > > For outputs: > > 1. Definition of “product combinations”: Clarify whether this means products purchased together in a single transaction, or products frequently purchased by the same customer across different transactions. > > 2. Granularity of time analysis: You specified monthly trends, but you might also want daily or weekly analysis depending on the business needs. > > 3. Additional metrics: Consider adding: > - Average basket size (number of items and monetary value) > - Payment method distribution > - Store location performance comparison > > 4. Output file specifications: For the CSV outputs, specify whether headers should be included and what the column structure should be. > > 5. Visualization formats: Specify the file formats for visualizations (PNG, PDF, interactive HTML, etc.) > > Overall, your specifications are solid and provide a good foundation for the implementation. These additional considerations can help make the requirements even more precise.

13.1.2.3 Step 3: Work by Hand

In this step, we’d manually process a small sample of the data to understand the analysis process. For brevity, we’ll focus on a simple example of finding product combinations.

Human work:

Sample data (5 transactions):

transaction_id,customer_id,timestamp,product_categories,amount,payment_method,store_location

T1001,C1,2023-01-15 14:30:00,"electronics,accessories",120.50,credit,store1

T1002,C2,2023-01-15 15:45:00,"groceries,dairy,produce",65.75,debit,store2

T1003,C1,2023-01-17 10:15:00,"electronics,videogames",210.99,credit,store1

T1004,C3,2023-01-18 09:30:00,"dairy,produce,meat",95.30,cash,store2

T1005,C2,2023-01-20 16:20:00,"groceries,dairy,bakery",45.60,debit,store2To find product combinations: 1. Split each transaction’s product_categories field 2. Generate all pair combinations 3. Count frequency of each pair

For T1001: [“electronics”, “accessories”] Pairs: [(“electronics”, “accessories”)]

For T1002: [“groceries”, “dairy”, “produce”] Pairs: [(“groceries”, “dairy”), (“groceries”, “produce”), (“dairy”, “produce”)]

…and so on for all transactions.

Counting frequencies: - (“dairy”, “produce”): 2 - (“groceries”, “dairy”): 2 - All others: 1

Most frequent combinations: (“dairy”, “produce”) and (“groceries”, “dairy”)

Human prompt:

I've worked through a small sample of the data manually to understand the process of finding product combinations. Here's what I did:

[The manual work shown above]

From this manual process, I can see that we need to:

1. Parse the comma-separated product categories

2. Generate all possible pairs from each transaction

3. Count occurrences of each pair across all transactions

4. Sort by frequency to find the most common combinations

This approach works for pairs, but for larger combinations, we might need association rule mining algorithms like Apriori. Does this manual approach seem correct, and are there any important considerations I'm missing?AI response: > Your manual approach for finding product combinations is sound! You’ve correctly identified the key steps for analyzing product pairs. Here are some important considerations and extensions: > > Validations and extensions: > > 1. Multiple levels of combinations: As you noted, beyond pairs, you might want to find triplets or larger combinations. Apriori or FP-Growth algorithms would indeed be appropriate for this. > > 2. Association metrics: Beyond just frequency count, consider calculating: > - Support (frequency of the combination relative to total transactions) > - Confidence (likelihood of Y when X is purchased) > - Lift (how much more likely Y is purchased when X is purchased, compared to normal) > > 3. Performance for large datasets: The naive approach of generating all combinations can be computationally expensive for transactions with many items. For the full dataset: > - Consider using optimized libraries like MLxtend or efficient implementations of Apriori > - You might need to set a minimum support threshold to filter out rare combinations early > > 4. Handling duplicates: If a customer buys multiple items in the same category in one transaction (e.g., two electronics items), your current approach correctly treats “electronics” as appearing once in that transaction. > > 5. Time-based analysis: You might want to see if popular combinations change over time (seasonal patterns, etc.) > > Your manual process demonstrates a good understanding of the approach. For implementation at scale, you’ll want to leverage existing libraries rather than building the combination generator from scratch, especially as you move beyond pairs to larger combinations.

13.1.2.4 Step 4: Write Pseudocode

Based on the manual exploration, we can now outline the solution in pseudocode.

Human prompt:

Based on my manual exploration, let me write pseudocode for the data analysis pipeline:

FUNCTION clean_data(input_file): data = READ_CSV(input_file)

# Handle missing values

FILL_MISSING(data.product_categories, "unknown")

FILL_MISSING(data.payment_method, "unknown")

FILL_MISSING(data.store_location, "unknown")

DROP_ROWS_WHERE(data.transaction_id IS NULL OR data.timestamp IS NULL)

# Standardize formats

data.timestamp = CONVERT_TO_DATETIME(data.timestamp)

data.amount = CONVERT_TO_FLOAT(data.amount)

RETURN dataFUNCTION find_product_combinations(data, min_support=0.001): # Initialize counter for product combinations combination_counts = EMPTY_COUNTER() total_transactions = COUNT_ROWS(data)

FOR each row IN data:

products = SPLIT(row.product_categories, ",")

product_combinations = GENERATE_COMBINATIONS(products, size=2)

FOR each combination IN product_combinations:

INCREMENT combination_counts[combination]

# Convert counts to support values

combination_support = EMPTY_DICT()

FOR combination, count IN combination_counts:

combination_support[combination] = count / total_transactions

# Filter by minimum support

frequent_combinations = FILTER(combination_support >= min_support)

# Sort by support (descending)

frequent_combinations = SORT_DESCENDING(frequent_combinations)

RETURN frequent_combinationsFUNCTION analyze_time_patterns(data): # Add temporal features data[‘month’] = EXTRACT_MONTH(data.timestamp) data[‘year’] = EXTRACT_YEAR(data.timestamp) data[‘day_of_week’] = EXTRACT_WEEKDAY(data.timestamp) data[‘hour’] = EXTRACT_HOUR(data.timestamp)

# Group by month and product categories

monthly_trends = GROUP_BY(data, ['year', 'month', 'product_categories'],

AGGREGATE(COUNT(transaction_id), SUM(amount)))

# Group by day of week and hour

hourly_patterns = GROUP_BY(data, ['day_of_week', 'hour', 'product_categories'],

AGGREGATE(COUNT(transaction_id)))

RETURN monthly_trends, hourly_patternsFUNCTION create_visualizations(product_combinations, monthly_trends, hourly_patterns): # Create bar chart of top combinations bar_chart = PLOT_BAR_CHART(TOP_N(product_combinations, 20))

# Create line chart of monthly trends

line_chart = PLOT_LINE_CHART(monthly_trends)

# Create heatmap of purchase patterns

heatmap = PLOT_HEATMAP(hourly_patterns)

RETURN bar_chart, line_chart, heatmapFUNCTION main(input_file, output_dir): # Clean data cleaned_data = clean_data(input_file) SAVE_CSV(cleaned_data, output_dir + “/cleaned_data.csv”)

# Find frequent product combinations

product_combinations = find_product_combinations(cleaned_data)

SAVE_CSV(TOP_N(product_combinations, 20), output_dir + "/top_combinations.csv")

# Analyze time patterns

monthly_trends, hourly_patterns = analyze_time_patterns(cleaned_data)

SAVE_CSV(monthly_trends, output_dir + "/monthly_trends.csv")

# Create visualizations

bar_chart, line_chart, heatmap = create_visualizations(

product_combinations, monthly_trends, hourly_patterns)

SAVE_PNG(bar_chart, output_dir + "/top_combinations.png")

SAVE_PNG(line_chart, output_dir + "/monthly_trends.png")

SAVE_PNG(heatmap, output_dir + "/hourly_patterns.png")

Does this pseudocode cover all the necessary steps for our data analysis pipeline? Are there any inefficiencies or improvements you would suggest?AI response: > Your pseudocode provides a good framework for the data analysis pipeline! It covers the main steps and follows a logical flow. Here are some suggestions for improvements and considerations: > > Efficiency improvements: > > 1. Chunked processing: For millions of rows, you might want to process the data in chunks to avoid memory issues: > > FUNCTION clean_data(input_file): > initialize empty dataframe for cleaned_data > FOR each chunk IN READ_CSV_IN_CHUNKS(input_file, chunk_size=100000): > # Apply cleaning operations to chunk > cleaned_chunk = CLEAN_CHUNK(chunk) > APPEND(cleaned_data, cleaned_chunk) > RETURN cleaned_data > > > 2. Optimized product combination generation: The current approach might be inefficient for transactions with many products: > > # Add a maximum combination size parameter > FUNCTION find_product_combinations(data, min_support=0.001, max_combination_size=2): > # Use an efficient algorithm like Apriori instead of manual combination generation > frequent_itemsets = APRIORI_ALGORITHM( > data.product_categories, > min_support=min_support, > max_length=max_combination_size > ) > RETURN frequent_itemsets > > > Additional considerations: > > 1. Data validation: Add checks for data quality: > > FUNCTION clean_data(input_file): > # ...existing code... > > # Add data validation > LOG_STATS(COUNT_MISSING_VALUES(data)) > LOG_STATS(COUNT_UNIQUE_VALUES(data.product_categories)) > VALIDATE_NUMERIC_RANGE(data.amount, min=0) > > # ...rest of function... > > > 2. Error handling: Add error handling for robust processing: > > FUNCTION main(input_file, output_dir): > TRY: > # ...existing code... > CATCH FileNotFoundError: > LOG_ERROR("Input file not found") > CATCH MemoryError: > LOG_ERROR("Insufficient memory, try reducing chunk size") > FINALLY: > CLEAN_UP_TEMP_FILES() > > > 3. Parallelization: For large datasets, consider parallel processing: > > FUNCTION find_product_combinations(data, min_support=0.001): > # Split data into partitions > partitions = SPLIT_DATA(data, num_partitions=NUM_CORES) > > # Process partitions in parallel > partial_results = PARALLEL_MAP(PROCESS_PARTITION, partitions) > > # Combine results > combined_results = COMBINE_PARTIAL_RESULTS(partial_results) > RETURN combined_results > > > 4. Progress tracking: For long-running operations, add progress tracking: > > FUNCTION clean_data(input_file): > total_chunks = ESTIMATE_TOTAL_CHUNKS(input_file, chunk_size) > FOR i, chunk IN ENUMERATE(READ_CSV_IN_CHUNKS(input_file)): > LOG_PROGRESS(f"Processing chunk {i}/{total_chunks}") > # ...process chunk... > > > These improvements would make your pipeline more robust, efficient, and suitable for large-scale data processing. Your current pseudocode is a great starting point, and these suggestions help address some common challenges in real-world data processing.

13.1.2.5 Step 5: Convert to Code

Now let’s implement the solution in Python, focusing on the product combination analysis.

Human prompt:

Let's convert the pseudocode for finding product combinations into Python code using pandas and mlxtend for the Apriori algorithm:

```python

import pandas as pd

from mlxtend.frequent_patterns import apriori, association_rules

from mlxtend.preprocessing import TransactionEncoder

import numpy as np

def find_product_combinations(data, min_support=0.001, max_len=3):

"""

Find frequent product combinations using the Apriori algorithm.

Args:

data (pd.DataFrame): DataFrame containing transaction data

min_support (float): Minimum support threshold (default: 0.001)

max_len (int): Maximum combination size (default: 3)

Returns:

pd.DataFrame: DataFrame with frequent itemsets and their support

"""

# Extract product categories and split into lists

transactions = data['product_categories'].str.split(',').tolist()

# Convert transactions to one-hot encoded format

te = TransactionEncoder()

te_ary = te.fit_transform(transactions)

df_encoded = pd.DataFrame(te_ary, columns=te.columns_)

# Apply Apriori algorithm

frequent_itemsets = apriori(

df_encoded,

min_support=min_support,

max_len=max_len,

use_colnames=True

)

# Filter itemsets with more than one item

frequent_itemsets = frequent_itemsets[frequent_itemsets['itemsets'].apply(lambda x: len(x) > 1)]

# Sort by support (descending)

frequent_itemsets = frequent_itemsets.sort_values('support', ascending=False)

# Format the output

result = pd.DataFrame({

'combination': frequent_itemsets['itemsets'].apply(lambda x: ', '.join(list(x))),

'support': frequent_itemsets['support'],

'transaction_count': (frequent_itemsets['support'] * len(data)).astype(int)

})

return result

def clean_data(file_path, chunk_size=100000):

"""

Clean and preprocess the transaction data.

Args:

file_path (str): Path to the CSV file

chunk_size (int): Number of rows to process at once

Returns:

pd.DataFrame: Cleaned and preprocessed data

"""

# Initialize an empty list to store chunks

chunks = []

# Process file in chunks

for chunk in pd.read_csv(file_path, chunksize=chunk_size):

# Fill missing values

chunk['product_categories'] = chunk['product_categories'].fillna('unknown')

chunk['payment_method'] = chunk['payment_method'].fillna('unknown')

chunk['store_location'] = chunk['store_location'].fillna('unknown')

# Drop rows with missing essential data

chunk = chunk.dropna(subset=['transaction_id', 'timestamp'])

# Standardize formats

chunk['timestamp'] = pd.to_datetime(chunk['timestamp'])

chunk['amount'] = pd.to_numeric(chunk['amount'], errors='coerce')

# Append the cleaned chunk

chunks.append(chunk)

# Combine all chunks

cleaned_data = pd.concat(chunks, ignore_index=True)

return cleaned_dataIs this implementation correct and efficient? Are there any issues or optimizations I should consider?

**AI response:**

> Your implementation is solid and follows good practices for processing large datasets! Here are some thoughts and potential optimizations:

>

> **Strengths:**

>

> 1. Using `chunksize` for reading large CSV files is excellent

> 2. The `mlxtend` library is a good choice for implementing Apriori efficiently

> 3. The code is well-structured with clear documentation

> 4. You've added appropriate data cleaning steps

>

> **Potential improvements:**

>

> 1. **Memory optimization for very large datasets:**

>

> ```python

> def find_product_combinations(data, min_support=0.001, max_len=3):

> # For extremely large datasets, we might want to sample first

> if len(data) > 10_000_000: # Arbitrary threshold

> data = data.sample(n=10_000_000, random_state=42)

> print(f"Data sampled to {len(data)} rows due to size")

>

> # Rest of your function...

> ```

>

> 2. **Error handling and logging:**

>

> ```python

> def clean_data(file_path, chunk_size=100000):

> chunks = []

> total_rows = 0

> invalid_rows = 0

>

> try:

> for i, chunk in enumerate(pd.read_csv(file_path, chunksize=chunk_size)):

> initial_rows = len(chunk)

> total_rows += initial_rows

>

> # Your cleaning code...

>

> invalid_rows += initial_rows - len(chunk)

> chunks.append(chunk)

>

> if i % 10 == 0:

> print(f"Processed {i+1} chunks ({total_rows} rows)...")

>

> print(f"Cleaning complete. Processed {total_rows} rows, removed {invalid_rows} invalid rows.")

> except Exception as e:

> print(f"Error during data cleaning: {str(e)}")

> raise

> ```

>

> 3. **Performance optimization for Apriori on sparse data:**

>

> ```python

> # If your dataset has many distinct products but each transaction has few,

> # use sparse representation for better performance

> from scipy.sparse import csr_matrix

>

> te_ary = te.fit_transform(transactions)

> sparse_te_ary = csr_matrix(te_ary)

>

> # Then use the sparse matrix with apriori if mlxtend supports it

> # Otherwise, for very sparse data, consider alternative implementations

> ```

>

> 4. **Data validation before processing:**

>

> ```python

> def find_product_combinations(data, min_support=0.001, max_len=3):

> # Validate input data

> if 'product_categories' not in data.columns:

> raise ValueError("DataFrame must contain 'product_categories' column")

>

> # Check for empty values post-cleaning

> empty_cats = data['product_categories'].isin(['', 'unknown']).sum()

> if empty_cats > 0:

> print(f"Warning: {empty_cats} rows have empty or unknown product categories")

>

> # Rest of your function...

> ```

>

> 5. **Consider adding time-based analysis:**

>

> ```python

> def find_seasonal_combinations(data, time_period='month'):

> """Find how product combinations vary over time."""

> # Add time period column

> if time_period == 'month':

> data['period'] = data['timestamp'].dt.to_period('M')

> elif time_period == 'quarter':

> data['period'] = data['timestamp'].dt.to_period('Q')

>

> periods = data['period'].unique()

> results = {}

>

> for period in periods:

> period_data = data[data['period'] == period]

> period_combos = find_product_combinations(period_data)

> results[period] = period_combos.head(10) # Top 10 for each period

>

> return results

> ```

>

> Overall, your implementation is well-designed for the task at hand. These suggestions are refinements that might be helpful in specific scenarios, but your core approach is sound!

#### Step 6: Test with Data

For this step, we would test our implementation with real data, starting with a small sample and progressively increasing to the full dataset.

**Human prompt:**Let’s test our implementation with a small sample dataset first:

import pandas as pd

import numpy as np

from datetime import datetime, timedelta

# Create sample data

np.random.seed(42)

n_samples = 1000

# Generate transaction IDs

transaction_ids = [f'T{i+1:04d}' for i in range(n_samples)]

# Generate customer IDs (100 unique customers)

customer_ids = [f'C{np.random.randint(1, 101):03d}' for _ in range(n_samples)]

# Generate timestamps (last 90 days)

base_date = datetime.now() - timedelta(days=90)

timestamps = [base_date + timedelta(days=np.random.randint(0, 90),

hours=np.random.randint(0, 24),

minutes=np.random.randint(0, 60))

for _ in range(n_samples)]

# Product categories

categories = ['electronics', 'accessories', 'clothing', 'shoes', 'books',

'groceries', 'dairy', 'produce', 'meat', 'bakery', 'beverages',

'snacks', 'beauty', 'health', 'kitchen', 'toys', 'sports',

'automotive', 'garden', 'furniture']

# Generate product categories (1-4 categories per transaction)

product_categories = []

for _ in range(n_samples):

n_cats = np.random.randint(1, 5)

cats = np.random.choice(categories, size=n_cats, replace=False)

product_categories.append(','.join(cats))

# Generate amounts

amounts = np.random.uniform(10, 500, n_samples).round(2)

# Payment methods

payment_methods = np.random.choice(['credit', 'debit', 'cash', 'mobile'],

size=n_samples,

p=[0.4, 0.3, 0.2, 0.1])

# Store locations

store_locations = np.random.choice(['store1', 'store2', 'store3', 'online'],

size=n_samples,

p=[0.3, 0.3, 0.2, 0.2])

# Create DataFrame

sample_data = pd.DataFrame({

'transaction_id': transaction_ids,

'customer_id': customer_ids,

'timestamp': timestamps,

'product_categories': product_categories,

'amount': amounts,

'payment_method': payment_methods,

'store_location': store_locations

})

# Save to CSV

sample_data.to_csv('sample_transactions.csv', index=False)

# Test our implementation

# 1. Find product combinations

results = find_product_combinations(sample_data, min_support=0.01, max_len=3)

print(f"Found {len(results)} frequent product combinations")

print("\nTop 10 product combinations:")

print(results.head(10))

# 2. Check for issues or unexpected results

print("\nData statistics:")

print(f"Total transactions: {len(sample_data)}")

print(f"Unique product categories: {len(set(','.join(product_categories).split(',')))}")

unique_combos = set()

for cats in product_categories:

cat_list = cats.split(',')

for i in range(len(cat_list)):

for j in range(i+1, len(cat_list)):

unique_combos.add(tuple(sorted([cat_list[i], cat_list[j]])))

print(f"Unique product pairs in data: {len(unique_combos)}")What issues might we encounter when scaling to the full dataset, and how should we address them?

**AI response:**

> Your test approach with synthetic data is excellent for validating the implementation! Here are the potential issues you might encounter when scaling to the full dataset and strategies to address them:

>

> **Scaling Issues and Solutions:**

>

> 1. **Memory Consumption**

> - **Issue**: The TransactionEncoder creates a one-hot encoded matrix which can be extremely memory-intensive for datasets with millions of transactions and many unique products.

> - **Solution**:

> - Process in batches based on time periods (e.g., analyze one month at a time)

> - Use sampling techniques for initial analysis

> - Implement a more memory-efficient version of Apriori or consider alternatives like FP-Growth

> - Use sparse matrix representations where possible

>

> 2. **Computation Time**

> - **Issue**: Apriori's performance degrades significantly with low support thresholds on large datasets.

> - **Solution**:

> - Start with higher minimum support values and gradually decrease

> - Use parallel processing libraries (e.g., Dask, PySpark)

> - Implement an incremental approach that processes data in chunks and merges results

> - Consider using GPU-accelerated implementations for very large datasets

>

> 3. **Long Tail of Infrequent Items**

> - **Issue**: With millions of transactions, you might get thousands of combinations with low support.

> - **Solution**:

> - Focus on combinations with higher lift or confidence scores

> - Implement filtering for statistically significant associations

> - Group similar products into categories for higher-level analysis

>

> 4. **Data Quality at Scale**

> - **Issue**: Large datasets often have more varied quality issues that become apparent only at scale.

> - **Solution**:

> - Implement more robust data cleaning steps

> - Add comprehensive logging of anomalies

> - Create data quality dashboards to monitor issues

>

> 5. **Time-Based Changes**

> - **Issue**: Product associations may change over time, and analyzing everything together might obscure patterns.

> - **Solution**:

> - Implement time-window analysis

> - Compare associations across different time periods

> - Look for emerging or declining associations

>

> **Implementation Improvements for Scale:**

>

> ```python

> # For very large datasets, consider this approach:

>

> def analyze_large_dataset(file_path, output_dir):

> # 1. First pass: Get data statistics and sample

> print("First pass: Collecting statistics...")

> stats = collect_data_stats(file_path)

>

> # 2. Determine optimal processing approach based on stats

> if stats['total_rows'] > 10_000_000:

> print("Very large dataset detected. Using distributed processing...")

> # Initialize Dask or Spark

> import dask.dataframe as dd

> ddf = dd.read_csv(file_path)

> # Process with distributed framework

> else:

> print("Processing with pandas...")

> # Process with your current approach

>

> # 3. Use progressive sampling for quick insights

> sample_sizes = [10000, 100000, 1000000]

> for size in sample_sizes:

> if size > stats['total_rows']:

> continue

> print(f"Analyzing sample of {size} transactions...")

> sample = get_stratified_sample(file_path, size)

> # Run analysis on progressively larger samples

> results = find_product_combinations(sample)

> save_results(results, f"{output_dir}/sample_{size}_results.csv")

>

> # 4. Full analysis with optimized parameters

> print("Running full analysis with optimized parameters...")

> # Use parameters learned from sample analysis

> full_results = process_in_time_windows(file_path)

>

> return full_results

> ```

>

> Your test setup is well-designed. When scaling to millions of rows, implementing these strategies will help manage both memory consumption and computation time while still extracting valuable insights from the data.

### Lessons Learned

This case study demonstrates several key intentional prompting principles:

1. **Thorough problem restatement** helped identify additional considerations beyond the basic requirements

2. **Detailed input/output specification** clarified the exact needs of the pipeline

3. **Manual exploration** of a small data sample provided crucial insights into the required algorithms

4. **Pseudocode development** allowed for structural planning before implementation

5. **Implementation with AI assistance** incorporated best practices and optimizations

6. **Testing with synthetic data** verified the approach before scaling to the full dataset

The intentional prompting methodology led to a solution that was:

- Efficient for large-scale data processing

- Robust to data quality issues

- Flexible enough to handle various analysis requirements

- Well-tested before deployment to the full dataset

## Case Study 2: Building a Weather Dashboard

### The Problem

A developer needs to build a weather dashboard application in Python. The application should:

1. Utilize a weather data module to retrieve current and forecast information

2. Process and display weather data in a user-friendly interface

3. Allow users to search for different locations

4. Provide visualizations of weather trends

### The Intentional Prompting Approach

#### Step 1: Restate the Problem

**Human prompt:**I need to create a weather dashboard in Python that uses a provided module to access weather data and displays it in a user-friendly interface. Can you help me restate this problem more clearly to ensure I understand all requirements?

**AI response:**

> This problem involves creating a Python application that:

> 1. Utilises an existing weather data module to retrieve weather information

> 2. Processes that data appropriately

> 3. Presents the information in a clear, user-friendly interface

> 4. Allows users to interact with the dashboard to view different weather metrics

>

> The key components you'll need to develop are:

> - Functions to retrieve and process data from the provided module

> - A display system (likely console-based) to show weather information

> - User interaction components to allow navigation and selection

> - Visualisation elements for presenting weather data graphically

**Human follow-up prompt (clarification):**That’s helpful. Could you help me understand what types of weather data are typically available through weather APIs, and what visualisations might be most useful for users? This will help me plan my dashboard layout.

#### Step 2: Identify Input and Output Requirements

**Human prompt:**Based on our discussion, let me identify the specific inputs and outputs for my weather dashboard:

Inputs: - Location information (city name or coordinates) - Type of weather data requested (current, forecast) - Time period for forecast data

Outputs: - Current weather conditions display - Visual representations of temperature, humidity, etc. - Forecast for upcoming days - User navigation menu

Does this cover the essential inputs and outputs? Is there anything important I’m missing?

**AI response:**

> Your input/output identification is strong! A few additional considerations:

>

> Additional inputs to consider:

> - User preferences for temperature units (Celsius/Fahrenheit)

> - Display preferences (text-only vs. graphical visualisation)

> - Refresh rate for data updates

>

> Additional outputs to consider:

> - Error messages for handling unavailable data or connection issues

> - Status indicators for data freshness

> - Historical data comparisons (if available)

> - Weather alerts or warnings

#### Step 3: Work Problem by Hand

**Human prompt:**Before we start coding, I want to sketch out the user interaction flow for the dashboard:

- User starts program

- Program displays welcome and prompts for location

- User enters location

- Program fetches current weather data

- Program displays main dashboard with:

- Current temperature and conditions

- Today’s high/low

- Humidity and wind

- Simple 3-day forecast

- Program shows menu options:

- View detailed forecast

- View temperature graph

- Change location

- Exit

- User selects option

- Program displays selected information

- Return to menu (step 6)

Does this flow make sense? Would you structure the user experience differently?

#### Step 4: Write Pseudocode

**Human prompt:**Now that we have a clear understanding of the problem and user flow, could you help me write pseudocode for this weather dashboard? I want to focus on creating a modular design with separate functions for data retrieval, display, and user interaction.

**AI response:**

```python

# Main program structure

function main():

display_welcome()

location = get_location_from_user()

units = get_preferred_units()

while True:

weather_data = fetch_weather_data(location, units)

display_dashboard(weather_data)

choice = display_menu()

if choice == "forecast":

display_detailed_forecast(weather_data)

else if choice == "graph":

display_temperature_graph(weather_data)

else if choice == "location":

location = get_location_from_user()

else if choice == "refresh":

continue # Will loop back and refresh data

else if choice == "exit":

break

# Data functions

function fetch_weather_data(location, units):

# Use provided weather module to get data

current_weather = weather_module.get_current(location, units)

forecast = weather_module.get_forecast(location, units)

return {current: current_weather, forecast: forecast}

# Display functions

function display_dashboard(weather_data):

clear_screen()

display_current_conditions(weather_data["current"])

display_simple_forecast(weather_data["forecast"])

function display_detailed_forecast(weather_data):

clear_screen()

for day in weather_data["forecast"]:

display_day_forecast(day)

wait_for_user()

function display_temperature_graph(weather_data):

clear_screen()

temperatures = extract_temperatures(weather_data["forecast"])

dates = extract_dates(weather_data["forecast"])

create_line_graph(dates, temperatures)

wait_for_user()

# User interaction functions

function display_menu():

print menu options

return get_valid_choice()

function get_location_from_user():

prompt for location

validate location

return locationFollow-up prompt (challenge):

The pseudocode looks good overall. I noticed that error handling isn't explicitly included. Could you expand the pseudocode to include error handling for:

1. Invalid location input

2. Weather service connection failures

3. Handling missing data in the weather response13.1.2.6 Step 5: Convert to Python Code

The developer starts implementing the solution based on the pseudocode, focusing on modularity, error handling, and user experience.

13.1.2.7 Step 6: Test with Data

Human prompt:

Now I want to test this code with some sample data. Can you provide a sample data structure that would be returned by:

1. weather_api.get_current()

2. weather_api.get_forecast()

Also, what test cases should I consider to ensure my dashboard handles all possible scenarios correctly?AI response: > Here are sample data structures that might be returned by the weather API: > > python > # Sample current weather data > sample_current = { > 'location': 'New York', > 'timestamp': '2023-09-15 14:30', > 'temperature': 22, > 'feels_like': 24, > 'condition': 'Partly Cloudy', > 'humidity': 65, > 'wind_speed': 8, > 'wind_direction': 'NE', > 'pressure': 1012, > 'visibility': 10, > 'uv_index': 5, > 'units': 'metric' > } > > # Sample forecast data (list of days) > sample_forecast = [ > { > 'date': '2023-09-15', > 'day_of_week': 'Friday', > 'high': 24, > 'low': 18, > 'condition': 'Partly Cloudy', > 'precipitation_chance': 20, > 'humidity': 65, > 'wind_speed': 8, > 'wind_direction': 'NE', > 'units': 'metric' > }, > # Additional days would follow... > ] > > > Test cases to consider: > > 1. Happy path: > - Valid location with complete weather data > - Multiple days of forecast > > 2. Data issues: > - Missing fields in current weather > - Partial forecast data available > - Empty forecast list > > 3. Connection issues: > - API timeout > - Service unavailable > > 4. User input scenarios: > - Invalid location name > - Empty input > - Special characters in location name

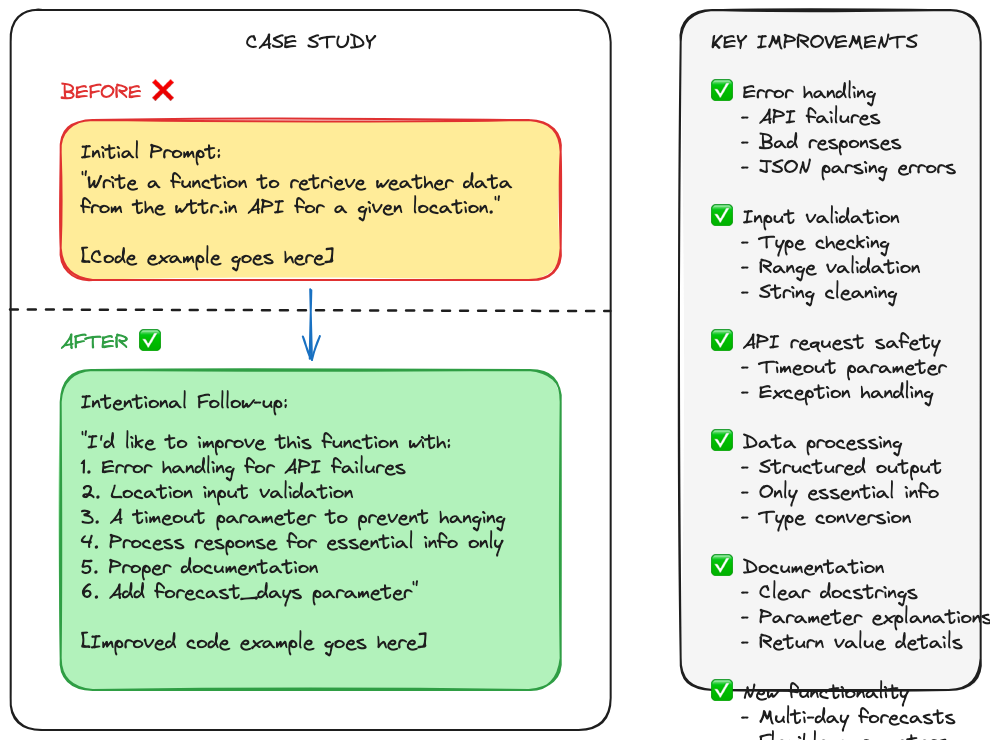

13.1.3 Before and After Example: Improving a Key Function

One of the critical functions in the application is the data retrieval function. Let’s examine how intentional prompting improved this function:

13.1.3.1 Before: Initial Data Retrieval Function

def fetch_weather_data(location, units):

"""Fetch weather data from API."""

current_weather = weather_api.get_current(location, units)

forecast = weather_api.get_forecast(location, units, days=5)

return {"current": current_weather, "forecast": forecast}13.1.3.2 Refinement Process Prompt

The fetch_weather_data() function needs improvement. It doesn't handle errors or validate the data. How can I make it more robust to:

1. Handle connection errors

2. Validate returned data structure

3. Handle missing or incomplete data

4. Provide meaningful error messages to the user13.1.3.3 After: Improved Data Retrieval Function

def fetch_weather_data(location, units):

"""

Fetch weather data from API with comprehensive error handling.

Args:

location: City name or location

units: 'metric' or 'imperial'

Returns:

Dictionary containing current weather and forecast

Raises:

ConnectionError: If unable to connect to weather service

ValueError: If location is invalid or data is incomplete

"""

try:

# Attempt to get current weather

current_weather = weather_api.get_current(location, units)

# Validate current weather data

required_current_fields = ['temperature', 'condition', 'humidity', 'wind_speed']

missing_fields = [field for field in required_current_fields if field not in current_weather]

if missing_fields:

# Handle missing fields by providing defaults

for field in missing_fields:

current_weather[field] = "N/A"

# Log the issue but don't fail completely

print(f"Warning: Some current weather data is unavailable: {', '.join(missing_fields)}")

# Attempt to get forecast with retry mechanism

max_retries = 3

retry_count = 0

forecast = None

while retry_count < max_retries and forecast is None:

try:

forecast = weather_api.get_forecast(location, units, days=5)

break

except Exception as e:

retry_count += 1

if retry_count >= max_retries:

print(f"Warning: Could not retrieve forecast data. Using current conditions only.")

forecast = [] # Empty forecast as fallback

else:

time.sleep(1) # Wait before retrying

# Ensure forecast is a list (even if empty)

if not isinstance(forecast, list):

forecast = []

return {

"current": current_weather,

"forecast": forecast,

"location": location,

"units": units,

"timestamp": time.strftime("%Y-%m-%d %H:%M")

}

except ConnectionError:

raise ConnectionError("Failed to connect to weather service. Please check your internet connection.")

except ValueError as e:

raise ValueError(f"Invalid location: {location}. Please check spelling and try again.")

except Exception as e:

# Convert any other exceptions from the API to something we can handle

raise Exception(f"Error fetching weather data: {str(e)}")13.1.4 Lessons Learned

This case study demonstrates several key aspects of intentional prompting:

- Thorough problem exploration - The developer took time to understand requirements and data structures before coding

- Modular design - The solution was broken down into focused functions with clear responsibilities

- Proactive error handling - Multiple failure scenarios were identified and handled gracefully

- Iterative refinement - The initial simple implementation was systematically improved based on specific requirements

- Test scenario preparation - Sample data and test cases were identified before testing began

The intentional approach resulted in a robust, maintainable application that properly handles edge cases and provides a good user experience, even when dealing with external data sources that may be unreliable.

13.2 Case Study 3: Debugging a Complex Bug

For our final case study, we’ll look at a debugging scenario where intentional prompting techniques help solve a challenging issue.

13.2.1 The Problem

A team is experiencing intermittent failures in their e-commerce checkout system. The checkout process occasionally fails with a generic error message, but only for certain users and at seemingly random times.

13.2.2 The Intentional Prompting Approach

13.2.2.1 Step 1: Restate the Problem

Human prompt:

We're experiencing intermittent failures in our checkout system. Let me restate the problem:

Users occasionally receive a generic "Unable to complete checkout" error when attempting to purchase items. The issue:

- Only affects approximately 5% of checkout attempts

- Seems more common during peak traffic hours

- Doesn't consistently affect the same users

- Doesn't correlate with specific products or payment methods

- Leaves limited information in our standard logs

Our goal is to identify the root cause and implement a fix to eliminate these checkout failures.

Is this an appropriate restatement of the debugging challenge?13.2.2.2 Step 2: Identify Information Needed

Human prompt:

To debug this issue effectively, we need to gather specific information:

INPUTS (Information sources):

- Application logs with enhanced logging enabled

- Database transaction logs

- Server performance metrics during failures

- Network traffic patterns

- User session data for failed checkouts

- Payment processor API logs

OUTPUTS (What we need to determine):

- Exact point of failure in the checkout process

- Conditions that trigger the failure

- Root cause of the issue

- Potential fix or mitigation strategy

Are there other information sources or outputs we should consider to effectively debug this intermittent issue?The debugging case study would continue through a systematic investigation process, demonstrating how intentional prompting techniques help narrow down the cause of complex bugs.

13.3 Key Takeaways

From these case studies, we can extract several important lessons about intentional prompting:

- Structured methodology yields better results

- Following all six steps produces more robust solutions than jumping straight to implementation

- Each step builds on the previous one, creating a solid foundation

- Problem exploration is time well spent

- Restating the problem and identifying inputs/outputs reveals critical requirements

- Manual exploration uncovers edge cases that might otherwise be missed

- AI assistance enhances human capability

- AI helps identify potential issues and optimizations

- The human developer maintains control over the approach and design decisions

- Testing is essential

- Starting with small, synthetic datasets verifies the approach before scaling

- Comprehensive testing reveals potential issues early

- Real-world complexity requires adaptability

- Initial solutions often need refinement as scale and complexity increase

- The methodology provides a framework for iterative improvement

13.4 Moving Forward

In the next chapter, we’ll explore how to scale intentional prompting to complex projects, moving beyond individual functions or modules to entire systems and codebases.