10 Intentional Prompting Patterns

10.1 The Art of Guiding AI

Effective collaboration with AI assistants requires more than just asking for solutions—it demands a thoughtful approach to crafting prompts that guide the AI toward helpful, educational responses. This chapter explores key patterns for intentional prompting that maximize the learning and development value of AI interactions.

10.2 Prompt Engineering: A Foundational Discipline

Prompt engineering has emerged as a critical field in AI interaction, focusing on how to craft precise instructions that help AI models produce accurate, relevant, and contextually appropriate responses. Beyond casual interaction, it encompasses systematic techniques for improving AI output quality.

10.2.1 From Prompt Engineering to Intentional Prompting

It’s important to understand the relationship between prompt engineering and intentional prompting:

Prompt engineering is primarily concerned with getting optimal outputs from AI systems. It focuses on crafting the right words, examples, and instructions to elicit high-quality responses from language models. Prompt engineers develop expertise in understanding model behaviors, leveraging context windows effectively, and using specialized techniques to guide AI outputs.

Intentional prompting incorporates prompt engineering techniques but embeds them within a comprehensive methodology for approaching programming tasks. While prompt engineering asks “How can I get the best output from this AI?”, intentional prompting asks “How can I use this AI as part of a thoughtful development process that maintains my understanding and control?”

The distinction becomes clearer when considering how each approach would handle a complex programming task:

| Aspect | Prompt Engineering Approach | Intentional Prompting Approach |
|---|---|---|
| Initial Task Analysis | Optimize prompt for detailed requirements | Follow Steps 1-2: Restate problem and identify inputs/outputs |
| Problem Understanding | Focus on conveying requirements clearly to AI | Follow Step 3: Work through examples by hand to build understanding |
| Solution Design | Craft prompts to generate complete solutions | Follow Step 4: Create pseudocode before implementation |
| Code Generation | Refine prompts until satisfactory code is produced | Follow Step 5: Use AI to implement pseudocode while maintaining understanding |
| Verification | Prompt AI to validate generated code | Follow Step 6: Rigorously test with data, especially edge cases |
| Learning Outcome | Improvement in prompt crafting skills | Improvement in both programming and AI collaboration skills |

Intentional prompting doesn’t replace prompt engineering—it integrates its techniques within a broader approach that preserves human agency, understanding, and skill development.

10.2.2 Core Prompt Engineering Techniques

10.2.2.1 Zero-Shot Prompting

Zero-shot prompting instructs an AI to perform a task without providing examples within the prompt. This technique leverages the model’s pre-existing knowledge to generate responses to novel tasks.

Example:

    Write a function that validates email addresses using regular expressions.

Zero-shot prompting works well for common tasks where the AI has extensive training data, but may struggle with specialized or complex tasks.
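
A response to the zero-shot prompt above might resemble the following sketch. The function name `is_valid_email` and the deliberately simple pattern are assumptions made for illustration; the pattern only checks for a single `@`, no whitespace, and a dot in the domain, and does not attempt to cover everything the email standards allow.

```python
import re

# Simplified pattern (an assumption for illustration): exactly one "@",
# no whitespace, and at least one dot in the domain part.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def is_valid_email(address: str) -> bool:
    """Return True if address matches the simplified email pattern."""
    if not isinstance(address, str):
        return False
    return EMAIL_PATTERN.fullmatch(address) is not None
```

Reading a sketch like this critically (for example, asking which valid addresses it would reject) is where the intentional part of the interaction begins.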

10.2.2.2 Few-Shot Prompting

This technique provides the model with one or more examples of expected input-output pairs before presenting the actual task. Examples help the model understand the desired format and approach.

Example:

    Here's an example of validating a phone number:

    Input: "555-123-4567"
    Output: Valid (matches pattern XXX-XXX-XXXX)

    Input: "5551234567"
    Output: Valid (can be reformatted to XXX-XXX-XXXX)

    Input: "555-1234"
    Output: Invalid (too few digits)

    Now, write a function that validates phone numbers according to this logic.

Few-shot prompting is particularly valuable for tasks with specific formatting requirements or uncommon patterns.
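
The three input-output pairs in the prompt above pin down the expected behavior fairly tightly. One possible implementation that satisfies them is sketched below; the names `normalize_phone` and `is_valid_phone` are hypothetical, and the decision to accept only the two formats shown in the examples is an assumption.

```python
import re
from typing import Optional

_DASHED = re.compile(r"[0-9]{3}-[0-9]{3}-[0-9]{4}")  # XXX-XXX-XXXX
_BARE = re.compile(r"[0-9]{10}")                      # ten digits, no separators


def normalize_phone(number: str) -> Optional[str]:
    """Return the number as XXX-XXX-XXXX, or None if it is not valid."""
    if _DASHED.fullmatch(number):
        return number
    if _BARE.fullmatch(number):
        # Reformat ten bare digits into the dashed pattern.
        return f"{number[:3]}-{number[3:6]}-{number[6:]}"
    return None


def is_valid_phone(number: str) -> bool:
    """Return True if the number can be expressed as XXX-XXX-XXXX."""
    return normalize_phone(number) is not None
```

Running the prompt's own examples through the function is a quick way to confirm that the AI followed the pattern the examples were meant to convey.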

10.2.2.3 Chain-of-Thought Prompting

Chain-of-Thought (CoT) prompting encourages the model to break down complex reasoning into intermediate steps, leading to more comprehensive and accurate outputs. This technique mimics human reasoning processes.

Example:

    Let's solve this step by step: Write a function that finds the longest common subsequence of two strings.

    First, let's understand what a subsequence is...
    Next, let's think about how to identify common subsequences...
    Then, we'll need an algorithm to find the longest one...

Chain-of-thought prompting significantly improves performance on problems requiring multi-step reasoning or algorithmic thinking.
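
The reasoning steps in the prompt above map onto a standard dynamic-programming solution. The sketch below is one way the final step might turn out, assuming the function name `longest_common_subsequence` and that returning any one longest subsequence is acceptable when several exist.

```python
def longest_common_subsequence(a: str, b: str) -> str:
    """Return one longest common subsequence of a and b."""
    rows, cols = len(a), len(b)

    # lengths[i][j] holds the LCS length of the prefixes a[:i] and b[:j].
    lengths = [[0] * (cols + 1) for _ in range(rows + 1)]
    for i in range(1, rows + 1):
        for j in range(1, cols + 1):
            if a[i - 1] == b[j - 1]:
                lengths[i][j] = lengths[i - 1][j - 1] + 1
            else:
                lengths[i][j] = max(lengths[i - 1][j], lengths[i][j - 1])

    # Walk back from the bottom-right corner to recover one subsequence.
    result = []
    i, j = rows, cols
    while i > 0 and j > 0:
        if a[i - 1] == b[j - 1]:
            result.append(a[i - 1])
            i -= 1
            j -= 1
        elif lengths[i - 1][j] >= lengths[i][j - 1]:
            i -= 1
        else:
            j -= 1
    return "".join(reversed(result))
```

Each comment corresponds to a step you could ask the AI to explain before any code is written: what the table means, how it is filled, and how the answer is read back out of it.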

10.2.2.4 Role-Based Prompting

Role-based prompting assigns a specific professional or character role to the AI, which helps frame its responses within a particular domain of expertise or perspective.

Example:

    As an experienced software architect, analyze this function and suggest improvements for scalability and performance.

This technique helps orient the AI toward specific terminology, frameworks, and priorities relevant to the assigned role.

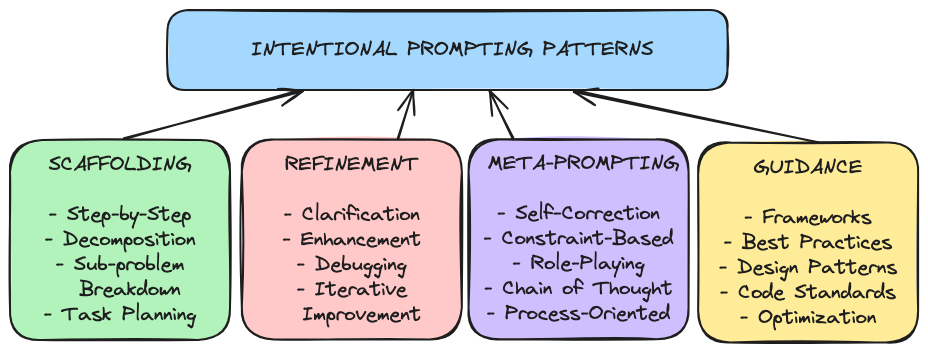

10.3 Types of Intentional Prompts

10.3.1 Foundation Prompts

Foundation prompts establish the baseline requirements for a programming task. Instead of just asking for a solution, these prompts set the stage for a productive dialogue.

Examples:

"I need to write a Python program that analyzes student grades and calculates statistics."

"Can you help me design a function that validates user input according to these requirements..."

"I'm working on a data structure to represent a family tree. What would be a good approach?"

Foundation prompts should provide enough context for the AI to understand the goal without being overly prescriptive about implementation details.

10.3.2 Clarification Prompts

Clarification prompts refine requirements and explore edge cases before diving into implementation.

Examples:

"Should the function handle negative numbers, or can we assume all inputs are positive?"

"What's a better approach for storing this data: a nested dictionary or a custom class?"

"How should we handle the case where a user enters text instead of a number?"

These prompts encourage thinking about requirements thoroughly before committing to code, a practice that prevents rework and bugs.

10.3.3 Scaffolding Prompts

Scaffolding prompts support learning by breaking down complex concepts into understandable components.

Examples:

"Before we implement this recursion, can you explain how the call stack will work in this case?"

"What's happening in this line of code that uses list comprehension? Can you break it down step by step?"

"Can you show me how this algorithm would process this specific input, step by step?"

These prompts transform the AI from a code generator into a tutor that helps build deeper understanding.

10.3.4 Challenge Prompts

Challenge prompts deliberately probe a solution with difficult inputs and scenarios to test understanding and expose potential issues.

Examples:

"What happens if the user enters an empty string here?"

"How would this code handle a very large dataset? Would it still be efficient?"

"Is there a potential race condition in this multithreaded approach?"

Challenge prompts help develop critical thinking about code rather than just accepting first solutions.

10.3.5 Refinement Prompts

Refinement prompts push for code improvements based on best practices and efficiency considerations.

Examples:

"Can we make this code more efficient in terms of memory usage?"

"Is there a more idiomatic way to write this in Python?"

"How could we refactor this to improve readability while maintaining functionality?"

These prompts help develop an eye for quality and foster continuous improvement.

10.3.6 Error Induction Prompts

Error induction prompts intentionally guide the AI toward making specific mistakes to explore error handling and debugging processes.

Examples:

"Let's use a recursive approach without considering the base case first."

"What if we don't handle the edge case where the input is empty?"

"Let's implement this without worrying about thread safety for now."

These prompts create valuable learning opportunities by examining potential failure modes.

10.4 Effective Prompting Patterns

10.4.1 The “What If?” Pattern

- Get working code from the AI

- Ask “What if [edge case]?”

- Evaluate the AI’s solution against your understanding

- Repeat with increasingly complex edge cases

This pattern systematically explores the boundaries of a solution, building robustness and understanding.
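
As a minimal sketch of this loop, suppose the AI's first answer computed an average as `sum(values) / len(values)`. Asking "What if the list is empty?" exposes a `ZeroDivisionError`. The function name `average_grade` and the choice to return `None` for empty input are assumptions for illustration; raising an exception would be an equally reasonable design.

```python
from typing import Iterable, Optional


def average_grade(grades: Iterable[float]) -> Optional[float]:
    """Return the mean of grades, or None when there are no grades."""
    items = list(grades)
    if not items:  # "What if the input is empty?"
        return None
    return sum(items) / len(items)


# Each "What if?" question becomes one row of edge-case data.
edge_cases = [
    ([], None),            # empty input
    ([5], 5.0),            # single value
    ([1, 2, 3, 4], 2.5),   # typical input
    ([-2, 2], 0.0),        # values that cancel out
]
for grades, expected in edge_cases:
    assert average_grade(grades) == expected
```

Keeping the edge cases in a small table makes it easy to add the next, more complex question without rewriting the checks.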

10.4.2 The Incremental Building Pattern

- Start with a minimally viable solution

- Add one feature at a time

- Integrate and test after each addition

This pattern mirrors agile development practices, keeping the development process manageable and focused.

10.4.3 The Deliberate Error Pattern

- Let the AI generate a solution

- Identify a potential issue (even if the AI didn’t make the error)

- Ask: “Is there a problem with how this handles [specific case]?”

- Use the discussion to deepen understanding

This pattern develops debugging skills and critical evaluation of code.
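
For illustration, consider a recursive factorial whose base case only covers `n == 0`. Asking "Is there a problem with how this handles negative numbers?" reveals that the recursion never reaches the base case. The function names below are hypothetical, and raising `ValueError` is just one possible fix.

```python
def factorial_first_draft(n: int) -> int:
    """First draft: correct for n >= 0, but never terminates for n < 0."""
    if n == 0:
        return 1
    return n * factorial_first_draft(n - 1)


def factorial(n: int) -> int:
    """Revised version that rejects negative input explicitly."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:  # base case
        return 1
    return n * factorial(n - 1)


def hits_recursion_limit(func, arg) -> bool:
    """Return True if calling func(arg) exhausts Python's recursion limit."""
    try:
        func(arg)
    except RecursionError:
        return True
    return False
```

Calling `hits_recursion_limit(factorial_first_draft, -1)` makes the failure observable instead of hypothetical, which gives the follow-up discussion with the AI something concrete to work from.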

10.4.4 The Comparative Analysis Pattern

- Ask the AI to implement a solution two different ways

- Request a comparison of trade-offs between approaches

- Make an informed decision based on the analysis

This pattern builds judgment about different implementation strategies.
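
As a small sketch of what such a comparison might produce, here are two ways to detect duplicates in a sequence. Both function names are hypothetical. The pairwise version takes quadratic time but needs no extra storage and works with unhashable items; the set-based version takes linear time on average but uses extra memory and requires hashable items.

```python
from typing import Hashable, Sequence


def has_duplicates_pairwise(items: Sequence) -> bool:
    """Compare every pair: O(n^2) time, O(1) extra space, any comparable items."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_set(items: Sequence[Hashable]) -> bool:
    """Track seen items in a set: O(n) average time, O(n) extra space."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False
```

Which version is the better choice depends on the data: a short list of lists favors the first, while a long list of strings or numbers favors the second.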

10.5 Advanced Prompt Engineering Strategies

Beyond the basic techniques described earlier, several advanced prompt engineering strategies can further enhance your interactions with AI coding assistants:

10.5.1 Context-Enhanced Prompting

This technique involves providing rich background information to help the AI generate more appropriate and contextually relevant responses.

Example:

    I'm building a web application with React frontend and Django backend. The application needs to handle both authenticated and unauthenticated users. We're using JWT for authentication.

    Now I need to implement a function that checks if a user's token is valid and returns appropriate data based on their permission level.

Context-enhanced prompting is particularly valuable when working on components of larger systems where architectural decisions and constraints need to be considered.

10.5.2 Constraint-Based Prompting

By explicitly stating constraints and requirements, you can guide the AI to produce solutions that fit within your project’s specific parameters.

Example:

    Write a sorting algorithm that:
    - Uses O(n log n) time complexity
    - Uses no more than O(1) extra space
    - Is stable (maintains relative order of equal elements)
    - Works well with partially sorted data

This approach is especially useful for performance-critical applications or when working within specific technical limitations.
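
Stated constraints are only useful if you check the answer against them. The sketch below tests one of the constraints from the prompt, stability, using Python's built-in `sorted()` (which is documented as stable) as the reference. The helper `is_stable` and both sort functions are hypothetical examples; insertion sort is included because it is stable and does well on partially sorted data, although its worst case is O(n^2), so it would not meet the time constraint.

```python
from typing import Callable, List, Tuple

Record = Tuple[int, str]  # (sort key, label that records the input order)


def insertion_sort_by_key(records: List[Record]) -> List[Record]:
    """Stable sort by key. Copies the input for convenience."""
    items = list(records)
    for i in range(1, len(items)):
        current = items[i]
        j = i - 1
        # Strict ">" never moves a record past an equal key, which keeps it stable.
        while j >= 0 and items[j][0] > current[0]:
            items[j + 1] = items[j]
            j -= 1
        items[j + 1] = current
    return items


def selection_sort_by_key(records: List[Record]) -> List[Record]:
    """Unstable sort by key: swapping can reorder records with equal keys."""
    items = list(records)
    for i in range(len(items)):
        smallest = i
        for j in range(i + 1, len(items)):
            if items[j][0] < items[smallest][0]:
                smallest = j
        items[i], items[smallest] = items[smallest], items[i]
    return items


def is_stable(sort_fn: Callable[[List[Record]], List[Record]],
              records: List[Record]) -> bool:
    """Return True if sort_fn matches a stable reference sort on this input."""
    return sort_fn(list(records)) == sorted(records, key=lambda r: r[0])
```

With `records = [(2, "a"), (2, "b"), (1, "c")]`, the insertion sort passes the check and the selection sort fails it. A passing check on one input is evidence rather than proof, so it is worth trying several inputs with repeated keys.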

10.5.3 Template-Guided Prompting

Template-guided prompting provides a structural framework that the AI should follow in its response. This ensures consistency and completeness.

Example:

    Analyze this function using the following template:
    1. Time complexity:
    2. Space complexity:
    3. Edge cases not handled:
    4. Potential optimizations:
    5. Clean code suggestions:

This strategy helps ensure the AI covers all necessary aspects of a problem rather than focusing only on the most obvious elements.

10.6 Integrating Prompt Engineering with Intentional Prompting

Intentional prompting isn’t an alternative to prompt engineering—it’s an evolution that incorporates prompt engineering techniques within a more comprehensive methodology. This integration allows us to leverage the power of well-crafted prompts while maintaining the educational and developmental benefits of intentional practice.

10.6.1 The Symbiotic Relationship

Prompt engineering provides the tactical tools for effective AI interaction, while intentional prompting provides the strategic framework for applying these tools:

| Prompt Engineering Provides | Intentional Prompting Provides |
|---|---|
| Techniques for precise AI instructions | Framework for maintaining human agency |
| Methods for optimizing AI responses | Structure for educational development |
| Domain-specific prompting strategies | Process that builds understanding |
| Input formatting best practices | Context for when/how to apply techniques |

This relationship means that skill in intentional prompting requires competence in prompt engineering techniques, applied within a thoughtful methodology that prioritizes human understanding and agency.

The intentional prompting patterns we’ve explored can be integrated into various workflows and methodologies. While they align particularly well with the six-step programming methodology discussed in this book, their application extends far beyond this specific framework.

10.6.2 Reinforcing the Six-Step Methodology

Intentional prompting naturally reinforces our six-step programming methodology:

- Restate the problem → Use foundation prompts and chain-of-thought techniques to clarify the problem

- Identify input/output → Use clarification prompts and constraint-based prompting to define boundaries

- Work by hand → Do this yourself, then use few-shot prompting to verify understanding

- Write pseudocode → Use template-guided prompting for consistent pseudocode structure

- Convert to code → Apply role-based prompting (e.g., “as an expert Python developer”) for idiomatic code

- Test with data → Combine challenge prompts with context-enhanced prompting for thorough testing

By combining formal prompt engineering techniques with intentional prompting patterns within this methodology, you develop a deeper understanding of programming concepts than simply asking for complete solutions.

10.6.3 Beyond the Six-Step Framework: Universal Application

While our methodology provides a structured approach, the core principles of intentional prompting apply universally across different development approaches and even beyond programming:

10.6.3.1 In Agile Development

- Use foundation and clarification prompts during sprint planning

- Apply challenge prompts during code reviews

- Leverage refinement prompts during refactoring sprints

- Use template-guided prompting for documentation tasks

10.6.3.2 In Domain-Specific Development

- Data Science: Apply chain-of-thought prompting to break down complex analytical problems

- Front-End Development: Use visual constraint prompts for UI implementation

- DevOps: Leverage role-based prompting for security auditing and configuration

- Systems Architecture: Apply comparative analysis prompts when evaluating design alternatives

10.6.3.3 Beyond Programming

The principles of intentional prompting extend to:

- Technical writing: Guiding AI to produce clear, structured documentation

- Educational content: Creating learning materials that build understanding

- Project management: Breaking down complex tasks and planning workflows

- Problem-solving in any domain: Applying structured thinking to any challenge

10.6.4 AI as a Thoughtful Assistant

Across all these applications, intentional prompting embraces AI as a thoughtful assistant rather than a replacement for human judgment. This partnership model is based on five key principles:

- Human-led workflows: The human sets goals, defines parameters, and makes the final decisions

- Critical evaluation: Thoughtfully evaluating AI suggestions based on expertise and context

- Iterative collaboration: Engaging in a refinement process where AI suggestions improve through feedback

- Domain-appropriate trust: Recognizing where AI excels versus where human judgment is essential

- Skill complementarity: Using AI for appropriate tasks while keeping human focus on creative and strategic aspects

By applying these principles across any methodology or domain, intentional prompting creates a collaborative relationship that leverages both human and AI strengths while overcoming their respective limitations.

10.7 Measuring Your Progress

As you practice intentional prompting, you can track your growth:

Beginner Level:

- You can identify obvious errors in AI-generated code

- You depend on the AI to explain most concepts

- Your prompts are simple and general

Intermediate Level:

- You can spot subtle logical errors

- You ask about specific programming concepts

- Your prompts include anticipated edge cases

Advanced Level:

- You strategically guide the AI toward teaching moments

- You compare multiple solution approaches

- Your prompts demonstrate computational thinking

- You question efficiency, readability, and best practices

10.8 Case Study: Improving a Weather Data Function

Let’s examine a practical example of intentional prompting that demonstrates how to guide an AI from a basic implementation to a robust solution.

10.8.1 Initial Prompt

A developer starts with a simple request:

    Write a function to retrieve weather data from the wttr.in API for a given location.

10.8.2 Initial AI-Generated Code

    def get_weather(location):
        import requests
        url = f"https://wttr.in/{location}?format=j1"
        response = requests.get(url)
        data = response.json()
        return data

10.8.3 Analysis of the Initial Code

The developer analyzes the initial code and identifies several issues:

- No error handling - will crash if the API is down or returns an error

- No input validation for the location parameter

- Returns the raw API response which is complex and includes unnecessary data

- No timeout parameter which could cause the application to hang

- No docstring or comments explaining how to use the function

10.8.4 Follow-up Prompt with Specific Improvements

The developer then crafts a follow-up prompt that addresses each identified issue:

    Thanks for the starting point. I'd like to improve this function with:
    1. Error handling for API failures and bad responses
    2. Location input validation
    3. A timeout parameter to prevent hanging
    4. A way to process the response to return only essential weather information
    5. Proper documentation with parameters and return values explained

    Also, can you make it handle the forecast_days parameter to allow getting forecast for multiple days?

10.8.5 Improved AI-Generated Code

    def get_weather_data(location, forecast_days=5, timeout=10):
        """
        Retrieve weather data for a specified location from wttr.in API.

        Args:
            location (str): City or location name
            forecast_days (int): Number of days to forecast (1-5)
            timeout (int): Timeout for API request in seconds

        Returns:
            dict: Weather data including current conditions and forecast,
                  or None if an error occurred
        """
        import requests

        # Input validation
        if not location or not isinstance(location, str):
            print("Error: Location must be a non-empty string")
            return None

        if not 1 <= forecast_days <= 5:
            print("Error: forecast_days must be between 1 and 5")
            forecast_days = min(max(1, forecast_days), 5)  # Clamp to valid range

        # Clean up the location string
        location = location.strip().replace(" ", "+")

        try:
            # Make request to wttr.in API
            url = f"https://wttr.in/{location}?format=j1"
            response = requests.get(url, timeout=timeout)
            response.raise_for_status()  # Raise exception for HTTP errors

            # Parse JSON response
            data = response.json()

            # Process and extract relevant information
            result = {
                "location": location.replace("+", " "),
                "current": {
                    "temperature": {
                        "current": int(data["current_condition"][0]["temp_C"]),
                        "feels_like": int(data["current_condition"][0]["FeelsLikeC"])
                    },
                    "condition": data["current_condition"][0]["weatherDesc"][0]["value"],
                    "humidity": int(data["current_condition"][0]["humidity"]),
                    "wind": {
                        "speed": int(data["current_condition"][0]["windspeedKmph"]),
                        "direction": data["current_condition"][0]["winddir16Point"]
                    },
                    "precipitation": float(data["current_condition"][0]["precipMM"])
                },
                "forecast": []
            }

            # Add forecast data
            for i in range(min(forecast_days, len(data["weather"]))):
                day = data["weather"][i]
                forecast_day = {
                    "date": day["date"],
                    "max_temp": int(day["maxtempC"]),
                    "min_temp": int(day["mintempC"]),
                    "condition": day["hourly"][4]["weatherDesc"][0]["value"],  # Midday condition
                    "precipitation": {
                        "chance": int(day["hourly"][4]["chanceofrain"]),
                        "amount": float(day["hourly"][4]["precipMM"])
                    },
                    "wind": {
                        "speed": int(day["hourly"][4]["windspeedKmph"]),
                        "direction": day["hourly"][4]["winddir16Point"]
                    }
                }
                result["forecast"].append(forecast_day)

            return result

        except requests.exceptions.RequestException as e:
            print(f"Error retrieving weather data: {e}")
            return None
        except (KeyError, ValueError, TypeError) as e:
            print(f"Error processing weather data: {e}")
            return None

10.8.6 Why This Prompting Strategy Was Effective

The follow-up prompt was effective because:

- It identified specific problems in the initial code rather than just asking for “better code”

- It requested specific improvements with clear objectives (error handling, validation, etc.)

- It suggested a new feature (forecast_days parameter) that made the function more useful

- It asked for proper documentation, which resulted in a comprehensive docstring

This approach guided the AI to generate a much more robust function that:

- Is properly documented

- Validates inputs

- Has comprehensive error handling

- Returns structured, processed data instead of raw API response

- Includes the new forecast functionality requested

The key to effective prompting was being specific about what needed improvement and why, rather than making vague requests for “better” code.
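
A natural next follow-up prompt would ask how to test the function without calling the live API. One option, sketched here under stated assumptions, is to move the parsing into a pure helper that can be exercised with hand-written data. The helper name `extract_current` and the sample payload are hypothetical: the field names are copied from the improved code above, and the values are made up.

```python
from typing import Any, Dict


def extract_current(data: Dict[str, Any]) -> Dict[str, Any]:
    """Pull a few current-condition fields out of a parsed response."""
    current = data["current_condition"][0]
    return {
        "temperature": int(current["temp_C"]),
        "feels_like": int(current["FeelsLikeC"]),
        "condition": current["weatherDesc"][0]["value"],
        "humidity": int(current["humidity"]),
    }


# Hand-written sample shaped like the fields accessed above; values are invented.
SAMPLE_RESPONSE = {
    "current_condition": [
        {
            "temp_C": "18",
            "FeelsLikeC": "17",
            "weatherDesc": [{"value": "Partly cloudy"}],
            "humidity": "60",
        }
    ]
}

summary = extract_current(SAMPLE_RESPONSE)
```

Because the helper takes a plain dictionary, both the happy path and malformed input (for example, an empty dictionary, which raises `KeyError`) can be tested without network access.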

10.9 Key Takeaways

- The goal is not to get a perfect solution from the AI, but to use the interaction to deepen your understanding

- Your programming knowledge is demonstrated by the quality of your prompts

- Intentionally introducing challenges and constraints leads to better learning outcomes

- The best programmers aren’t those who know all the answers, but those who know how to ask the right questions

- Specific, targeted follow-up prompts yield much better results than vague requests for improvement

In the next chapter, we’ll explore how these prompting patterns can be applied specifically to debugging tasks, creating a powerful workflow for solving problems in your code.