3 Principles of Intentional Prompting

3.1 The Spectrum of AI Integration Approaches

Before diving into the core principles of intentional prompting, it’s important to understand the broader landscape of approaches to integrating AI into development workflows. These approaches reflect different philosophies about the role of AI and human developers.

3.1.1 Permissive Approach: “Just Take the Answer”

The permissive approach involves accepting AI outputs with minimal human oversight or intervention. In this model, developers largely defer to AI-generated recommendations, content, and decisions.

Advantages:

- Maximum efficiency and speed in completing tasks

- Reduced cognitive load on humans

- Quick access to AI capabilities without friction

- Easier adoption for non-technical users

Limitations and Risks:

- Potential propagation of AI errors or biases

- Limited human learning and skill development

- Reduced critical thinking and problem-solving practice

- Overreliance may lead to degraded human capabilities over time

- Lack of contextual understanding in complex situations

This approach aligns closely with the “vibe coding” philosophy discussed in the previous chapter, prioritizing speed and output over process and understanding.

3.1.2 Dismissive Approach: “Reject AI Outright”

At the opposite end of the spectrum is the dismissive approach, characterized by skepticism or outright rejection of AI tools. This stance prioritizes traditional methods and maintains full human control.

Advantages:

- Maintaining full human control and autonomy

- Preserving traditional skills and methods

- Avoiding risks associated with AI errors

- Clear human accountability and ownership

- Preserving jobs and roles that might otherwise be automated

Limitations:

- Missing potential productivity and quality improvements

- Requiring more human time and resources

- Unnecessary repetitive or mechanical work for employees

- Limited access to AI’s data processing and pattern recognition capabilities

3.1.3 Collaborative Approach: “Human-Directed AI Assistance”

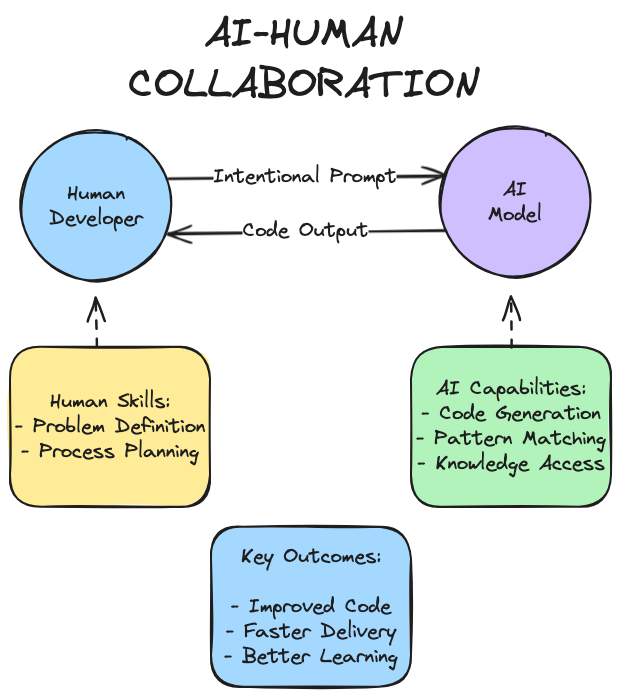

Between these extremes lies the collaborative approach, which views AI as a tool that enhances human capabilities without replacing human judgment. This middle-ground philosophy establishes a partnership where humans direct AI systems, critically evaluate their outputs, and maintain decision-making authority.

Advantages:

- Combining human judgment with AI efficiency

- Maintaining human oversight while leveraging AI strengths

- Enabling iterative improvement through feedback

- Preserving human agency and accountability

- Creating opportunities for human upskilling alongside AI use

Challenges:

- Requires more thought and time than pure acceptance

- Demands both AI literacy and domain expertise

- Needs more complex workflows and training

- Requires clear frameworks for when to trust or question AI outputs

Intentional prompting sits firmly within this collaborative approach, viewing AI as a thoughtful assistant rather than a replacement for human judgment.

3.1.4 Comparing Intentional Prompting vs. Vibe Programming

To illustrate the differences in these approaches, the following table compares intentional prompting with vibe programming across several important dimensions:

| Dimension | Intentional Prompting | Vibe Programming |
|---|---|---|
| Primary Goal | Understanding and skill development alongside output | Speed and output production |
| Development Process | Structured, methodical approach with defined steps | Rapid, conversational, minimal planning |
| Human Involvement | Human directs process and makes key decisions | Human describes desired outcome, AI leads implementation |
| Code Review | Thorough review with critical evaluation | Minimal review, focus on functionality over quality |
| Debugging Approach | Systematic investigation based on understanding | Trial-and-error, asking AI to fix issues |
| Learning Outcome | Progressive skill development and deeper understanding | Potentially limited technical growth, focus on prompt crafting |
| Code Quality | Optimized for readability, maintainability, efficiency | Prioritizes working functionality over optimality |
| Ideal Use Cases | Complex systems, educational contexts, enterprise software | Rapid prototyping, MVPs, personal projects, non-critical applications |
| Knowledge Transfer | Facilitates team understanding and knowledge sharing | May create knowledge silos where only original developer understands prompting approach |
| Maintenance | Designed for long-term maintenance and evolution | May present challenges for future maintenance or extension |

This comparison isn’t meant to suggest that one approach is universally superior to the other. Rather, each has contexts where it may be more appropriate. Intentional prompting offers significant advantages for educational settings, complex systems, team environments, and situations where code quality and maintainability are paramount.

3.1.5 Example: Different Approaches to the Same Challenge

To make these differences concrete, let’s examine how the permissive (vibe coding) and collaborative (intentional prompting) approaches would handle the same programming challenge: creating a function to validate email addresses.

3.1.5.1 Permissive Approach (Vibe Coding)

Human: Write a function that validates email addresses.

AI generates:

```python
def validate_email(email):
    import re
    pattern = r'^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$'
    return bool(re.match(pattern, email))
```

H: It’s not working for some emails with plus signs. Fix it.

AI generates updated code:

```python
def validate_email(email):
    import re
    pattern = r'^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$'
    return bool(re.match(pattern, email))
```

H: It’s still not working for email+alias@domain.com. Can you fix it?

AI revises again:

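```python
def validate_email(email):
    import re
    # Complex pattern handling all edge cases
    pattern = r'^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$'
    return bool(re.match(pattern, email))
```

The permissive approach emphasizes speed but may involve several iterations of trial and error without deepening understanding of the problem or solution. Notice that the pattern is identical in all three responses and already permits a plus sign in the local part, so the reported failure must lie elsewhere; trial and error alone never surfaces that.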

3.1.5.2 Collaborative Approach (Intentional Prompting)

Human: I need to create an email validation function. Before writing code, let’s discuss what makes a valid email address and identify the key requirements.

AI: Provides explanation of email format requirements and edge cases

Human: Based on those requirements, let’s brainstorm approaches. What are the trade-offs between using regular expressions versus parsing the parts individually?

AI: Explains different approaches with their advantages and limitations

Human: I’ll choose the regex approach for simplicity. Let’s write pseudocode first:

```
function validateEmail(email):
    if email is empty:
        return false
    if email doesn't contain exactly one @ symbol:
        return false
    split email into local and domain parts
    check if local part meets requirements
    check if domain part has at least one period and valid format
    return true if all checks pass
```

AI: Provides feedback on pseudocode and suggests improvements

Human: Now let’s implement this in Python with a clear approach:

```python
import re


def validate_email(email):
    """
    Validates an email address against a simplified pattern.

    The pattern covers common address formats; it does not implement
    the full RFC 5322 grammar.

    Args:
        email: String to validate as an email address

    Returns:
        bool: True if the email is valid, False otherwise
    """
    # Initial validity check: reject None, empty strings, and non-string input
    if not email or not isinstance(email, str):
        return False

    # Basic pattern that handles most common email formats
    pattern = r'^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$'

    # fullmatch rejects a trailing newline, which re.match with $ would accept
    return bool(re.fullmatch(pattern, email))
```

H: Let’s test this with various cases including edge cases:

- user@example.com

- user+alias@example.com

- user.name@example.co.uk

- user@sub.example.com

- incomplete-email-without-domain@

- incomplete-email-without-username@example.com

- user@incomplete-domain
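The test list above can be turned into a small table-driven check. What follows is a minimal sketch, assuming the simplified regex used in this section. The expected values, the `failing_cases` helper, and the `@example.com` input (used here as one reading of the "missing username" case) are assumptions added for illustration, not results reported in the chapter.

```python
import re

# Simplified pattern from this section; an assumption, not a full RFC 5322 check.
EMAIL_PATTERN = re.compile(r'^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$')


def validate_email(email):
    """Return True if email matches the simplified pattern."""
    if not isinstance(email, str) or not email:
        return False
    return bool(EMAIL_PATTERN.fullmatch(email))


# (input, expected) pairs; the expected values are illustrative assumptions.
CASES = [
    ("user@example.com", True),
    ("user+alias@example.com", True),
    ("user.name@example.co.uk", True),
    ("user@sub.example.com", True),
    ("incomplete-email-without-domain@", False),
    ("@example.com", False),  # assumed form of the "missing username" case
    ("user@incomplete-domain", False),
]


def failing_cases(cases=CASES):
    """Return the inputs whose actual result differs from the expected one."""
    return [email for email, expected in cases if validate_email(email) != expected]


if __name__ == "__main__":
    print(failing_cases())
```

An empty list from `failing_cases()` means every case behaved as expected. Keeping the cases in a table makes it cheap to add the next edge case that a conversation with the assistant turns up.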

The collaborative approach takes longer initially but builds understanding, produces better-documented code, and addresses edge cases proactively rather than reactively.

This example illustrates how the different approaches affect not just the final code but the entire development process and learning experience.

3.2 Maintaining Human Agency

At the core of intentional prompting is the principle of human agency: the programmer remains the architect and decision-maker throughout the development process, not just a consumer of AI-generated solutions.

3.2.1 Directive vs. Delegative Approaches

There are two fundamental ways to interact with AI coding assistants:

Delegative Approach: Handing off problems entirely to the AI and accepting its solutions with minimal scrutiny or direction.

Directive Approach: Guiding the AI through a structured process, maintaining control over architecture and design decisions, and critically evaluating its outputs.

Intentional prompting emphasizes the directive approach, where you:

- Break down problems before presenting them to AI

- Provide clear constraints and requirements

- Review and question AI-generated code

- Make deliberate decisions about when and how to incorporate AI suggestions

3.2.2 Techniques for Maintaining Agency

- Frame the problem yourself before asking the AI for help

- Establish evaluation criteria for solutions before generating them

- Request multiple approaches to avoid anchoring on the first solution

- Question assumptions in AI-generated code

- Make final integration decisions based on your understanding, not convenience
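One way to make "establish evaluation criteria before generating" concrete is to write the criteria as executable checks first, then run every candidate against them. The sketch below assumes a hypothetical task (removing duplicates from a list); the two candidate functions stand in for alternative AI suggestions, and all names are illustrative.

```python
def _leaves_input_unchanged(candidate):
    """Check that the candidate does not modify the list it is given."""
    data = [2, 2, 1]
    candidate(data)
    return data == [2, 2, 1]


# Criteria written down before any solution is requested.
# Each entry is (description, check), where check takes a candidate function.
CRITERIA = [
    ("handles an empty list", lambda f: f([]) == []),
    ("removes duplicates", lambda f: sorted(f([3, 1, 3, 2, 1])) == [1, 2, 3]),
    ("preserves first-seen order", lambda f: f([3, 1, 3, 2, 1]) == [3, 1, 2]),
    ("does not mutate its input", _leaves_input_unchanged),
]


# Two hypothetical candidates, standing in for alternative AI suggestions.
def candidate_set(items):
    return list(set(items))  # short, but the resulting order is not guaranteed


def candidate_ordered(items):
    return list(dict.fromkeys(items))  # dicts preserve insertion order


def evaluate(candidate, criteria=CRITERIA):
    """Return the descriptions of the criteria that the candidate fails."""
    return [description for description, check in criteria if not check(candidate)]
```

Because the criteria exist before either candidate does, the comparison is anchored on what you decided you needed rather than on whichever suggestion arrived first.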

3.3 Understanding vs. Copying

A critical distinction in intentional prompting is the difference between understanding a solution and merely copying it. The goal is not just working code, but comprehension that builds long-term programming capabilities.

3.3.1 The “Black Box” Problem

When developers copy AI-generated code without understanding it, they create “black boxes” in their codebase: components they can’t effectively debug, maintain, or explain. Over time, this leads to brittle systems and stunted professional growth.

3.3.2 Signs of Understanding

You understand code when you can:

- Explain how it works to someone else

- Modify it confidently to handle new requirements

- Identify potential edge cases it might not handle

- Recognize its performance characteristics and limitations

- Connect it to broader programming concepts and patterns

3.3.3 Strategies for Building Understanding

- Request explanations of generated code

- Ask “what if” questions about edge cases or modifications

- Trace through execution with specific examples

- Modify the code to handle different scenarios

- Compare different implementations of the same functionality
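Comparing implementations can be done mechanically: run two versions on the same inputs and look only at where they disagree. The sketch below reuses the email example from earlier in this chapter; `validate_by_parts` and `disagreements` are hypothetical helpers written for illustration, and neither validator is meant to be authoritative.

```python
import re

PATTERN = re.compile(r'^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$')


def validate_by_regex(email):
    """Regex-based check using the simplified pattern from this chapter."""
    return bool(PATTERN.fullmatch(email))


def validate_by_parts(email):
    """Structural check: one '@', non-empty local part, dotted domain, no empty labels."""
    if email.count("@") != 1:
        return False
    local, domain = email.split("@")
    labels = domain.split(".")
    return bool(local) and len(labels) >= 2 and all(labels)


def disagreements(samples):
    """Return the samples on which the two implementations give different answers."""
    return [s for s in samples if validate_by_regex(s) != validate_by_parts(s)]


SAMPLES = ["user@example.com", "user@example..com", "user@exam_ple.com"]
```

Here `disagreements(SAMPLES)` flags the last two inputs: the regex accepts the doubled dot that the structural check rejects, and the structural check accepts the underscore that the regex rejects. Each disagreement is a ready-made "what if" question to trace by hand or put to the assistant.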

3.4 Process Over Output

Intentional prompting emphasizes the development process rather than just the final code. This focus on process leads to better long-term outcomes in both code quality and developer growth.

3.4.1 Why Process Matters

- Better architecture: A thoughtful process leads to better-designed code

- Fewer bugs: Systematic approaches catch edge cases that rushed solutions miss

- Easier maintenance: Code developed through a clear process is typically more readable and maintainable

- Knowledge transfer: Process-focused development makes it easier to onboard others

- Skill development: Focusing on process builds transferable skills rather than point solutions

3.4.2 The Six-Step Process

The six-step programming methodology (which we’ll explore in depth in Part 2) provides a structured process that works with or without AI assistance:

1. Restate the problem

2. Identify inputs and outputs

3. Work through examples by hand

4. Write pseudocode

5. Convert to working code

6. Test thoroughly
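To show how the six steps above leave a trace in the finished code, here is a minimal sketch on a deliberately small, hypothetical problem (counting vowels). The problem, the function name, and the hand-worked examples are assumptions chosen for illustration.

```python
# Step 1 - Restate the problem: count how many vowels appear in a piece of text.
# Step 2 - Identify inputs and outputs: input is a str; output is an int >= 0.
# Step 3 - Work through examples by hand:
#     "sky"   -> 0
#     "Audio" -> 4   (A, u, i, o)
# Step 4 - Write pseudocode:
#     total = 0
#     for each character in text:
#         if the lowercased character is one of a, e, i, o, u: add 1 to total
#     return total

# Step 5 - Convert to working code.
VOWELS = frozenset("aeiou")


def count_vowels(text):
    """Count the vowels (a, e, i, o, u, case-insensitive) in text."""
    return sum(1 for ch in text.lower() if ch in VOWELS)


# Step 6 - Test thoroughly, reusing the hand-worked examples from step 3.
def run_tests():
    assert count_vowels("") == 0
    assert count_vowels("sky") == 0
    assert count_vowels("Audio") == 4
    return True
```

The hand-worked examples from step 3 become the first test cases in step 6, so the tests check the code against your own understanding rather than against whatever the implementation happens to return.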

3.4.3 Integrating AI Into Your Process

Rather than replacing your process, AI should enhance it:

- Use AI to explore problem variations during problem restatement

- Generate test cases during input/output identification

- Verify your manual examples

- Suggest and refine pseudocode

- Help convert pseudocode to working implementations

- Generate comprehensive test cases
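One possible way to keep AI in a supporting role at each step is to hold a prompt template per step that always starts from your own work. The sketch below is an assumption about how that could look; the template wording, the step keys, and `build_prompt` are hypothetical and not part of the methodology itself.

```python
# Hypothetical prompt templates, one per step; the wording is illustrative only.
# Each template embeds the human's own work for that step in the {work} field.
STEP_PROMPTS = {
    "restate": "Here is my restatement of the problem: {work}\nWhat variations or ambiguities am I missing?",
    "inputs_outputs": "These are the inputs and outputs I identified: {work}\nSuggest test cases that cover their boundaries.",
    "examples": "I worked this example by hand: {work}\nCheck my reasoning and point out any mistake.",
    "pseudocode": "Here is my pseudocode: {work}\nSuggest refinements without writing real code yet.",
    "code": "Convert this pseudocode to working code, keeping its structure: {work}",
    "test": "Here is what my code should do: {work}\nPropose additional test cases, including edge cases.",
}


def build_prompt(step, work):
    """Fill the template for the given step with the human's own work."""
    return STEP_PROMPTS[step].format(work=work)
```

For example, `build_prompt("pseudocode", "loop over characters, count vowels")` produces a request for refinement of pseudocode you already wrote, rather than a request for a finished solution.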

3.5 The Learning Mindset

Intentional prompting is fundamentally about continuous learning rather than just task completion. This mindset treats every programming challenge as an opportunity to deepen understanding and build skills.

3.5.1 Characteristics of a Learning Mindset

- Curiosity about how and why solutions work

- Comfort with not knowing everything immediately

- Desire to understand deeply rather than just solve the immediate problem

- Willingness to explore alternatives even after finding a working solution

- Reflection on the development process to improve future approaches

3.5.2 AI as a Learning Partner

When approached with a learning mindset, AI assistants become powerful learning tools:

- Use AI to explore concepts you don’t fully understand

- Ask AI to compare different approaches and explain tradeoffs

- Request explanations of unfamiliar code patterns

- Use AI to find gaps in your understanding

- Challenge AI-generated solutions to deepen your own thinking

3.5.3 Intentional Learning Techniques

- Concept exploration: Ask the AI to explain concepts in multiple ways

- Implementation comparison: Request different implementations of the same functionality

- Knowledge testing: Explain a concept to the AI and ask for feedback

- Deliberate challenge: Introduce constraints that force exploration of new approaches

- Reflective questioning: Ask “why” questions about code decisions

3.6 Ethical Considerations

Intentional prompting includes ethical considerations about the use of AI in the development process.

3.6.1 Attribution and Transparency

- Be transparent about AI contributions to your code

- Understand your organization’s policies about AI-assisted development

- Consider adding attribution comments for significant AI contributions

- Maintain clear documentation of human design decisions
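An attribution comment can be as simple as a short header above the affected code. The format below is a hypothetical convention, not a standard; the field names and the `slugify` example are assumptions, and your organization's policy may prescribe something different.

```python
import re


# AI-assisted: first draft suggested by an LLM coding assistant (tool and date
# would be recorded here, following your organization's policy).
# Human decisions: ASCII-only slugs; runs of other characters collapse to one hyphen.
# Reviewed by: <reviewer name>
def slugify(title):
    """Turn a title into a lowercase, hyphen-separated slug."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")
```

Recording the human design decisions next to the attribution keeps the two kinds of contribution distinguishable when the code is revisited later.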

3.6.2 Security and Quality Responsibility

- Always review AI-generated code for security vulnerabilities

- Never delegate final quality assurance to AI tools

- Maintain awareness of common security issues in AI-generated code

- Establish clear review processes for AI-assisted development
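As one illustration of what a security review might look for, the sketch below contrasts a query built by string interpolation with a parameterized one, using Python's standard `sqlite3` module. SQL injection is chosen here as a well-known class of issue, not one named in this chapter; the table, data, and function names are hypothetical.

```python
import sqlite3


def demo_connection():
    """Create an in-memory database with a small users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT)")
    conn.executemany("INSERT INTO users VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn, name):
    # Vulnerable: the input is pasted into the SQL text and can change the query.
    query = f"SELECT name FROM users WHERE name = '{name}'"
    return [row[0] for row in conn.execute(query)]


def find_user_safe(conn, name):
    # Parameterized: the input is passed as data and never parsed as SQL.
    query = "SELECT name FROM users WHERE name = ?"
    return [row[0] for row in conn.execute(query, (name,))]


MALICIOUS = "x' OR '1'='1"
```

With the `MALICIOUS` input, the unsafe version returns every row in the table, while the safe version returns nothing because no user has that literal name. Both functions look equally plausible at a glance, which is why the review has to be deliberate.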

3.6.3 Bias and Fairness

- Be aware that AI tools may embed biases from their training data

- Review code for fairness issues, especially in user-facing features

- Consider diverse perspectives when evaluating AI-generated solutions

- Question assumptions that might embed problematic patterns

3.6.4 Professional Development Balance

- Balance efficiency gains from AI with skill development needs

- Identify core skills you want to strengthen, even with AI assistance

- Create intentional learning projects where you limit AI assistance

- Use AI to stretch beyond your current capabilities rather than stay within them

3.7 Addressing Common Concerns and Resistance to LLMs

Despite their utility, Large Language Models face resistance from many programmers and educators. Some of these concerns have deep historical roots, while others arise from the unique characteristics of modern LLMs. Understanding and addressing these concerns is essential for effective adoption of intentional prompting.

3.7.1 The Ambiguity of Natural Language

As far back as the late 1970s, computer scientist Edsger W. Dijkstra presented a compelling critique of natural language programming in his essay “On the foolishness of ‘natural language programming’”. Dijkstra argued that programming fundamentally requires “the care and accuracy that is characteristic for the use of any formal symbolism” and that the inherent ambiguity of natural language made it unsuitable for the precision required in programming.

Dijkstra’s concerns were valid for his time, but modern LLM-based approaches offer new possibilities through iterative refinement processes:

- Progressive disambiguation: Iterative approaches provide mechanisms to gradually eliminate ambiguities through multiple rounds of interaction, transforming imprecise natural language into precise formal representations

- Structured frameworks: Methodologies like intentional prompting add structure to otherwise ambiguous interactions

- Human-in-the-loop validation: The human programmer validates outputs and maintains final authority over implementation decisions
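Progressive disambiguation can be seen in miniature with a request such as "remove the duplicates from this list", which leaves at least two questions open: is the original order kept, and do "a" and "A" count as the same item? The sketch below assumes one set of answers and records them in the signature and docstring; the function and its parameter are hypothetical.

```python
def dedupe(items, *, case_sensitive=True):
    """Remove duplicate strings, keeping the first occurrence of each.

    Decisions made explicit while refining the request:
    - order: items stay in their original first-seen order
    - case: with case_sensitive=False, "a" and "A" count as the same item,
      and the spelling of the first occurrence is kept
    """
    seen = set()
    result = []
    for item in items:
        key = item if case_sensitive else item.casefold()
        if key not in seen:
            seen.add(key)
            result.append(item)
    return result
```

The natural-language request was the entry point, but the precise behaviour now lives in formal code: `dedupe(["a", "B", "a", "b"])` keeps three items, while the same call with `case_sensitive=False` keeps two.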

The intentional prompting methodology directly addresses Dijkstra’s concerns by providing a framework that bridges natural language and formal code, using the former as an entry point rather than a replacement for the latter.

3.7.2 The Non-Deterministic Nature of LLMs

Another significant concern involves the non-deterministic behavior of LLMs: they can produce different outputs even when given the same input. This unpredictability raises legitimate questions about reliability, especially in mission-critical applications.

Research has documented significant variations in LLM performance across multiple runs, with accuracy varying by up to 15% and gaps between best and worst performance reaching as high as 70%. This variability stems from:

- Input interpretation variability: LLMs may interpret the same natural language prompt differently across different runs

- Output generation variability: Even with the same interpretation, the code generated may vary due to sampling methods
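To see why sampling makes output vary, consider a toy model of choosing the next token. The sketch below assumes temperature-scaled softmax sampling, one common sampling method; the token scores are made up for illustration and do not come from any real model.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert token scores to probabilities; lower temperature sharpens the distribution."""
    scaled = {token: score / temperature for token, score in logits.items()}
    peak = max(scaled.values())  # subtract the maximum for numerical stability
    exps = {token: math.exp(value - peak) for token, value in scaled.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}


def greedy(logits):
    """Always pick the highest-scoring token: the same input gives the same output."""
    return max(logits, key=logits.get)


def sample(logits, temperature, rng):
    """Draw one token at random, weighted by its softmax probability."""
    probs = softmax(logits, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Toy scores for the next token; the values are made up for illustration.
LOGITS = {"re.match": 2.0, "re.fullmatch": 1.6, "re.search": 0.5}
```

`greedy(LOGITS)` returns the same token every time, whereas repeated calls to `sample` with differently seeded `random.Random` instances can return different tokens for identical input. Lowering the temperature concentrates probability on the top-scoring token and so reduces, but does not remove, that variation.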

The intentional prompting methodology addresses these concerns through:

- Structured evaluation: The methodology provides clear criteria for evaluating generated code

- Explicit testing: Step 6 (Test with Data) ensures thorough validation of any generated solution

- Human oversight: The human programmer maintains control over the development process, reviewing and modifying generated code as needed

- Iterative refinement: The methodology embraces multiple iterations to converge on reliable solutions
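The safeguards above can be combined into a simple loop: a fixed, human-written test suite judges every candidate, and failures are fed back into the next round. This is a minimal sketch under stated assumptions; `generate` is a hypothetical callable standing in for a request to an assistant, and `fake_generate` simulates one so the example runs without any AI service.

```python
def run_tests(func, tests):
    """Return a list of failure messages; an empty list means all tests passed."""
    failures = []
    for args, expected in tests:
        try:
            actual = func(*args)
        except Exception as exc:
            failures.append(f"{args!r} raised {exc!r}")
            continue
        if actual != expected:
            failures.append(f"{args!r}: expected {expected!r}, got {actual!r}")
    return failures


def refine(generate, tests, max_rounds=3):
    """Request candidates until one passes the fixed tests or the rounds run out.

    generate is a hypothetical callable: it receives the previous round's
    failures (a list of strings) and returns a candidate function.
    """
    failures = []
    for round_number in range(1, max_rounds + 1):
        candidate = generate(failures)
        failures = run_tests(candidate, tests)
        if not failures:
            return candidate, round_number
    return None, max_rounds


# Stand-in for an assistant: the first attempt is buggy, later ones are fixed.
def fake_generate(failures):
    if not failures:
        return lambda a, b: a - b  # deliberate bug on the first round
    return lambda a, b: a + b


TESTS = [((1, 2), 3), ((0, 0), 0), ((-1, 1), 0)]
```

With the simulated assistant, `refine(fake_generate, TESTS)` accepts the second candidate. Because the tests stay fixed while the generated code varies, run-to-run differences in output affect how many rounds are needed, not what counts as acceptable. When no candidate passes, the loop returns `None` and the decision goes back to the human.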

3.7.3 Procedural Knowledge Transfer

Interestingly, LLMs excel at procedural tasks because they’ve inherited human problem-solving patterns through their training data. Research shows that LLMs have absorbed procedural knowledge through exposure to:

- Error-checking protocols from technical manuals

- Creative iteration cycles in writing samples

- Mathematical proof structures in STEM literature

- Software engineering best practices from code repositories

This explains why models respond well to structured methodologies like intentional prompting: such methodologies activate latent procedural knowledge that mirrors human problem-solving approaches.

The six-step methodology leverages this characteristic by providing a framework that:

- Activates the model’s latent understanding of systematic problem-solving

- Provides clear procedural guidance that aligns with effective human workflows

- Creates a shared procedural language between human and AI

3.7.4 Finding the Right Balance

The most effective approach to LLM integration lies in finding the right balance between permissive acceptance and dismissive rejection. The intentional prompting methodology represents this balanced middle ground:

- It acknowledges LLMs’ limitations regarding ambiguity and non-determinism

- It establishes guardrails through a structured methodology

- It leverages LLMs’ strengths in pattern recognition and procedural knowledge

- It maintains human agency and oversight throughout the development process

By addressing these concerns directly and providing a structured framework for human-AI collaboration, intentional prompting offers a pragmatic approach that captures the benefits of AI assistance while mitigating its risks.

3.8 Putting Principles Into Practice

These principles (maintaining agency, understanding vs. copying, process over output, the learning mindset, ethical considerations, and addressing common concerns) form the foundation of intentional prompting.

In the next section, we’ll explore how these principles are applied through the six-step programming methodology, providing a structured approach to developing software with AI assistance.